I.S.K. architecture

Tom Scott

Introduction to Apache Iceberg

Apache Iceberg is an open table format designed for large-scale, high-performance analytics on big data. It was built to address the limitations of older table formats like Hive and to support modern data lake architectures.

In this article we'll take a deep dive into I.S.K. (Iceberg Service for Kafka), an Iceberg projection over real-time, event-based data stored in operational systems.

Materialized at runtime, I.S.K. decouples the physical structure of the data from its logical, table-based representation.

With I.S.K., you can:

- Access up-to-date (zero latency) data from analytical applications.

- Impose structure on read in your analytical applications.

- Logically repartition with no physical data movement.

- Surface multiple “views” on the data with different characteristics defined at runtime.

- Take advantage of analytical practices such as indexing, column statistics, etc., to achieve massive performance enhancements.

High-Level Architecture

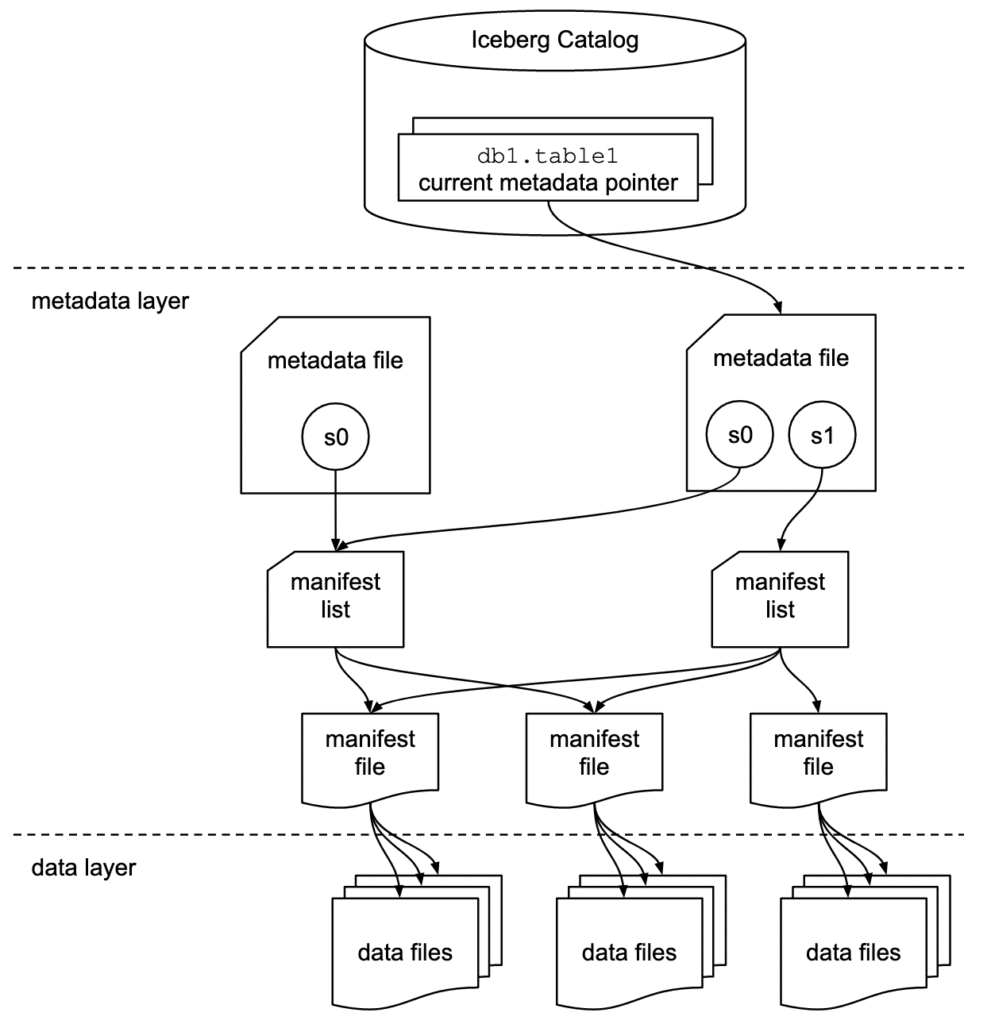

Iceberg Architecture Duality

| Iceberg Unit | I.S.K. Unit |
|---|---|
| Iceberg Catalog | Custom REST Iceberg Catalog implementation that generates metadata files on the fly during the LoadTable call, based on the underlying streaming system topic. |
| Metadata File | Metadata file content is returned by the catalog during the LoadTable call. Metadata files are ephemeral and not stored persistently. |
| Manifest List | Ephemeral manifest list with references to manifest files. |
| Manifest File | Ephemeral manifest file, computed at request time with data splits relevant to the query. Enables per-query optimization, efficient pruning, and schema-on-read. |
| Data File | Ephemeral data files, served via an S3-compatible interface but actually read directly from the source streaming system with per-request pruning/splits. |
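The catalog row above can be illustrated with a minimal sketch of building table metadata in memory for one request. This is not the I.S.K. implementation: `TopicInfo`, `load_table`, the `s3://isk-virtual/...` location, and the choice to derive the snapshot ID from the latest offset are all assumptions made for illustration. The field names loosely follow the Iceberg table metadata format, but the result is a simplified subset rather than a complete, valid metadata document.

```python
import time
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class TopicInfo:
    """Hypothetical description of a streaming topic (not an I.S.K. type)."""
    cluster: str
    name: str
    fields: dict     # field name -> primitive type name, e.g. "string"
    end_offset: int  # newest offset visible when the request arrives


def load_table(topic: TopicInfo) -> dict:
    """Build table metadata in memory for a single LoadTable-style request."""
    # Assumption: the snapshot ID simply tracks the newest visible offset.
    snapshot_id = topic.end_offset
    # Hypothetical virtual location; nothing is written to storage.
    base = f"s3://isk-virtual/{topic.cluster}/{topic.name}"
    schema = {
        "schema-id": 0,
        "type": "struct",
        "fields": [
            {"id": i, "name": name, "required": False, "type": type_name}
            for i, (name, type_name) in enumerate(topic.fields.items(), start=1)
        ],
    }
    return {
        "format-version": 2,
        # Derived from the location so repeated requests return the same UUID.
        "table-uuid": str(uuid.uuid5(uuid.NAMESPACE_URL, base)),
        "location": base,
        "last-updated-ms": int(time.time() * 1000),
        "current-schema-id": 0,
        "schemas": [schema],
        "current-snapshot-id": snapshot_id,
        "snapshots": [
            {
                "snapshot-id": snapshot_id,
                "manifest-list": f"{base}/metadata/snap-{snapshot_id}.avro",
            }
        ],
    }
```

Because nothing is persisted, two requests made at different times can legitimately return different snapshots for the same topic, which is how the table stays current with the stream in this sketch.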

Stream Table Duality

I.S.K. maps common streaming concepts to Iceberg-specific terms, enabling seamless representation of event streaming data (e.g., from Apache Pulsar or Apache Kafka) in Iceberg-compatible systems and tools.

| Kafka Unit | I.S.K. Unit | Description |
|---|---|---|
| Cluster | Namespace | Each Kafka or Pulsar cluster is represented as an Iceberg namespace to indicate the separation of resources. |
| Topic | Table | Topics and tables are both logical collections of data points, so a topic maps directly to a table. |
| Message | Row | An individual message within a topic can be viewed as a row within a table when combined with a schema. |
| Message field | Column | A field within an event message is similar to a column within a table row in Iceberg. |
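The mapping in the table above can be sketched in a few lines. The example assumes JSON-encoded messages, which the article does not specify, and the helper names `table_identifier` and `message_to_row` are hypothetical. It shows a cluster and topic becoming a namespace and table name, and a message becoming a row once a list of columns (the schema) is applied on read.

```python
import json
from typing import Sequence, Tuple


def table_identifier(cluster: str, topic: str) -> Tuple[str, str]:
    """Map (cluster, topic) to an Iceberg-style (namespace, table) pair."""
    return (cluster, topic)


def message_to_row(payload: bytes, columns: Sequence[str]) -> tuple:
    """Project one JSON message onto the requested columns.

    Fields missing from the message become None, and fields that are not
    in the column list are ignored, which is the schema-on-read behaviour
    assumed here.
    """
    event = json.loads(payload)
    return tuple(event.get(column) for column in columns)
```

For example, `message_to_row(b'{"customer_name": "X", "amount": 12.5}', ["customer_name", "amount"])` yields the row `("X", 12.5)`.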

Indexing

Consumers of event streaming systems and analytical tools that read Apache Iceberg tables have vastly different performance requirements:

- Streaming systems iterate through a small volume of messages quickly.

- Analytical systems perform less frequent but higher-volume reads.

I.S.K. bridges this gap using indexing techniques that selectively read only relevant data for a query.

Example: For the predicate WHERE customer_name = 'X', I.S.K. indexing drastically reduces the number of messages read by pruning to only those that match the predicate.

Note: This is not an exhaustive list of I.S.K.'s performance-enhancing techniques, which continue to evolve.
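To make the pruning example concrete, here is a minimal sketch of one possible index: a map from field value to the offsets of the messages that carry it. The article does not describe the index structures I.S.K. actually uses, so the `EqualityIndex` class and its methods are assumptions chosen only to illustrate how an equality predicate can be answered without scanning every message.

```python
from collections import defaultdict


class EqualityIndex:
    """Hypothetical index mapping a field value to message offsets."""

    def __init__(self, field: str):
        self.field = field
        self._offsets = defaultdict(list)

    def add(self, offset: int, event: dict) -> None:
        """Record the offset under the event's value for the indexed field."""
        if self.field in event:
            self._offsets[event[self.field]].append(offset)

    def lookup(self, value) -> list:
        """Return the offsets whose indexed field equals value."""
        return list(self._offsets.get(value, []))


# Usage: index a small in-memory log, then prune for customer_name = 'X'.
log = [
    {"customer_name": "X", "amount": 10},
    {"customer_name": "Y", "amount": 20},
    {"customer_name": "X", "amount": 30},
]
index = EqualityIndex("customer_name")
for offset, event in enumerate(log):
    index.add(offset, event)
matching_offsets = index.lookup("X")  # only these offsets need to be read
```

With an index like this, a query on customer_name touches only the matching offsets instead of the full topic.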

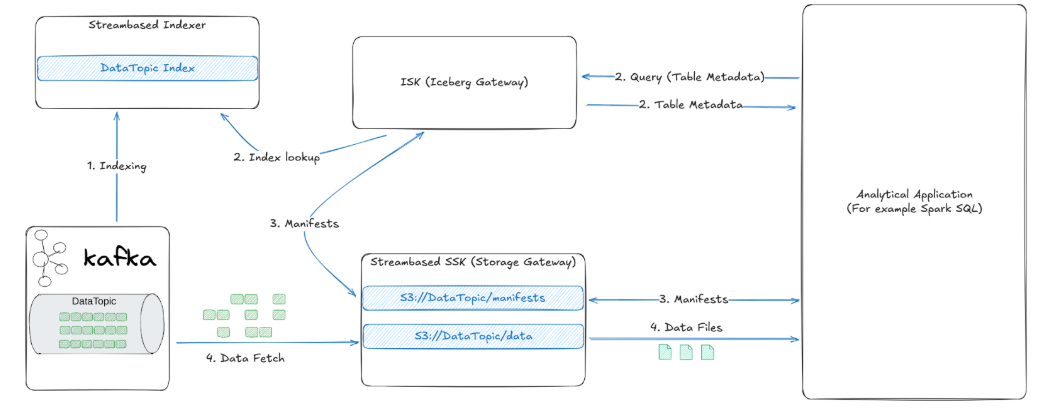

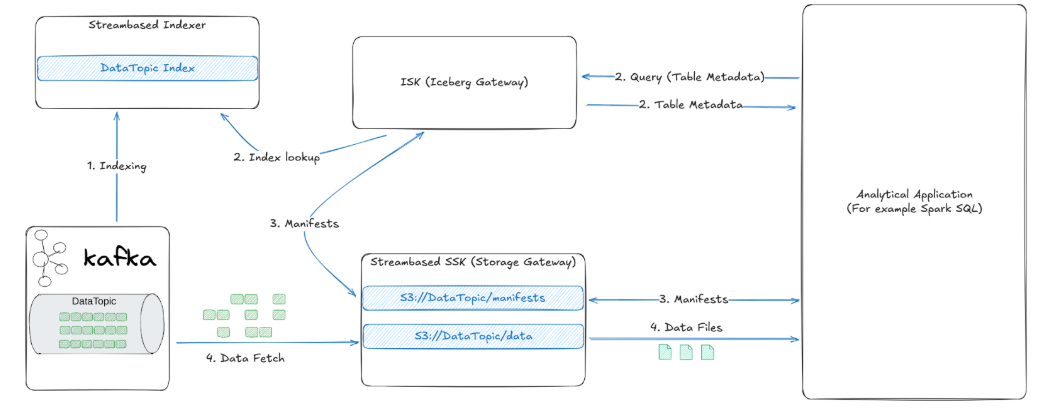

Logical Architecture Diagram

Request Processing Flow

- Indexing

  - Background indexing of data in the streaming system keeps the indexes up to date.

- Table metadata

  - Analytical application issues a query (e.g., SELECT … FROM DataTopic WHERE customer_name = 'X').

  - Analytical application queries I.S.K. for table metadata.

  - I.S.K. generates table metadata at runtime by querying the stream-based indexer service.

  - I.S.K. returns the metadata, including references to manifest files (e.g., S3 paths).

- Manifests

  - Analytical application reads manifest files directly from the storage service (SSK).

  - SSK queries I.S.K. for manifest files. I.S.K. generates manifests with partitions/splits for efficient pruning.

  - SSK returns the manifests to the analytical application.

  - Analytical application uses the manifest to locate data files.

- Data files

  - SSK fetches the relevant data from the source streaming system, applying skips/splits for pruning.

  - SSK aggregates events into batch data files in a streaming fashion, so a file is never held fully in memory.

  - SSK returns the batched data files to the analytical application.
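The data-file step in the flow above can be sketched as a generator that groups events into bounded batches as they arrive. This is an illustration under assumptions, not the SSK implementation: the function name `batch_events` and the fixed `batch_size` policy are hypothetical, and a real service would also encode each batch into a file format before returning it.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batch_events(events: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Yield lists of at most batch_size events without buffering the stream."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(events)
    while True:
        # Pull only the next batch_size events from the source.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch
```

Because the generator holds only one batch at a time, memory use is bounded by `batch_size` rather than by the amount of data the query touches.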

Summary

I.S.K. (Iceberg Service for Kafka) bridges the gap between real-time event streaming and modern analytical workflows by providing a logical, Iceberg-compliant interface over operational data systems. By materializing metadata and data views on the fly, I.S.K. enables zero-latency access to streaming data using familiar analytical tools and paradigms. Through ephemeral metadata, runtime partitioning, and indexing techniques, it delivers powerful performance optimizations without requiring changes to the underlying streaming infrastructure.

Whether you're looking to unify streaming and batch processing, simplify your analytical data architecture, or unlock real-time insights without data duplication, I.S.K. offers a scalable and elegant solution built on the foundations of Apache Iceberg.